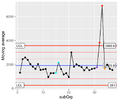

If I’m interpreting this correctly, I’ve seen this many times, in different guises. It is essentially a guardband, especially when the sample size is low and the test is destructive. It’s an attempt to ensure that the entire distribution is above the actual lower specification limit. It isn’t well thought out, though.

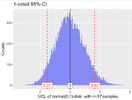

And in all honesty, I’ve used a version of this for destructive tests on large batches, applied to each lot or batch. But you really need more than a single sample: depending on the amount of variation (the within-lot standard deviation), you need 10-30 samples. This is applicable when the within-lot variation is stable and it is the average that moves around from lot to lot (in other words, a truly non-homogeneous process). If the within-lot variation isn’t stable, you need a slightly different plan. You are essentially estimating the lot average and SD to ensure that the lot is above spec.
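As a rough illustration of that per-lot check, here is a minimal Python sketch that estimates the lot mean and SD from a sample and accepts the lot only if the mean minus k·SD clears the lower spec. The function name `lot_passes` and the default `k=3.0` are hypothetical choices for illustration; with only 10-30 samples, a proper one-sided tolerance-interval factor would be larger than 3 to account for the uncertainty in the estimates.

```python
import statistics

def lot_passes(measurements, lsl, k=3.0):
    """Hypothetical per-lot check: accept if mean - k*SD >= LSL.

    k=3.0 is an assumed factor; a one-sided tolerance factor that
    accounts for sample size would be more rigorous for small n.
    """
    if len(measurements) < 2:
        raise ValueError("need at least two measurements to estimate SD")
    mean = statistics.mean(measurements)
    sd = statistics.stdev(measurements)  # sample SD (n - 1 denominator)
    return mean - k * sd >= lsl

# Hypothetical destructive-test results for one lot (10 pieces).
lot = [10.2, 9.8, 10.1, 10.0, 9.9, 10.3, 9.7, 10.0, 10.1, 9.9]
print(lot_passes(lot, lsl=9.0))
print(lot_passes(lot, lsl=9.5))
```

With these made-up numbers the mean is 10.0 and the SD is about 0.18, so the lot clears an LSL of 9.0 but not 9.5.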

Using a single sample that ‘just meets’ the LCL doesn’t guarantee that the lot meets the actual lower spec. And doing it only once per year per tool is silly. But it usually makes those who know very little about statistics and physics feel better.
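To put a rough number on why one passing part proves little, here is a minimal sketch using Python’s standard-library `statistics.NormalDist`, assuming the lot is normally distributed. The lot mean, SD, LSL and single-sample threshold are all hypothetical values chosen only for illustration.

```python
from statistics import NormalDist

# Hypothetical lot: normally distributed, mean 10.0, within-lot SD 1.0.
lot = NormalDist(mu=10.0, sigma=1.0)
lsl = 8.0        # hypothetical actual lower spec limit
threshold = 9.0  # hypothetical single-sample acceptance value (the 'LCL')

# Chance that one randomly drawn part meets the threshold.
p_single_pass = 1 - lot.cdf(threshold)
# Fraction of the lot that is actually below spec.
frac_below_spec = lot.cdf(lsl)

print(f"P(single sample passes) = {p_single_pass:.3f}")
print(f"Fraction of lot below LSL = {frac_below_spec:.4f}")
```

Under these assumptions a lot with roughly 2.3% of parts below spec still passes the single-sample check about 84% of the time.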

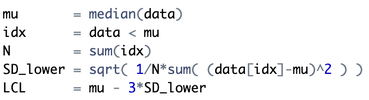

The OP could take their last pre-production sample (the one that will be implemented in mass production), calculate the mean and SD of the 125 pieces, and set the guardband at the average minus 3SD. (It isn’t an ‘LCL’ or a spec. It’s a guardband. Words have meanings, and we should use them rather than change them to fit our misinterpretation of the world.) If I were forced to stay with an annual sample of 1, I would set the guardband at the spec PLUS 3SD, which would be ultra conservative for a sample of 1.
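The two guardband options above can be sketched in a few lines of Python. The function names are hypothetical, and the sketch assumes the SD used for the single-sample case is the one estimated from the pre-production run.

```python
import statistics

def guardband_from_preproduction(measurements, k=3.0):
    """Hypothetical helper: guardband = mean - k*SD of the pre-production run."""
    return statistics.mean(measurements) - k * statistics.stdev(measurements)

def guardband_for_single_sample(lsl, sd, k=3.0):
    """Hypothetical helper: conservative n=1 guardband = spec + k*SD.

    Assumes sd comes from the pre-production run.
    """
    return lsl + k * sd

def single_sample_ok(value, guardband):
    """Accept the annual single sample only if it meets the guardband."""
    return value >= guardband

# Toy pre-production data (in practice, the 125 pieces).
preprod = [9.0, 10.0, 11.0]
gb_lot = guardband_from_preproduction(preprod)
gb_single = guardband_for_single_sample(lsl=8.0, sd=statistics.stdev(preprod))
print(gb_lot, gb_single)
```

With the toy data (mean 10, SD 1) the pre-production guardband is 7.0 and the single-sample guardband is 11.0, which shows how much stricter the n=1 rule is.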